The previous post described the extended Kalman filter. This post explains how to create a ROS package that implements an extended Kalman filter, which can be used for sensor fusion. The sensor data that will be fused together comes from a robots inertial measurement unit (imu), rotary encoders (wheel odometry) and vision sensors (camera). The ekf package that is developed in this post will be used to compare the sensor data and apply sensor fusion to estimate the pose of the robot as it moves around. To achieve this, the following packages are used.

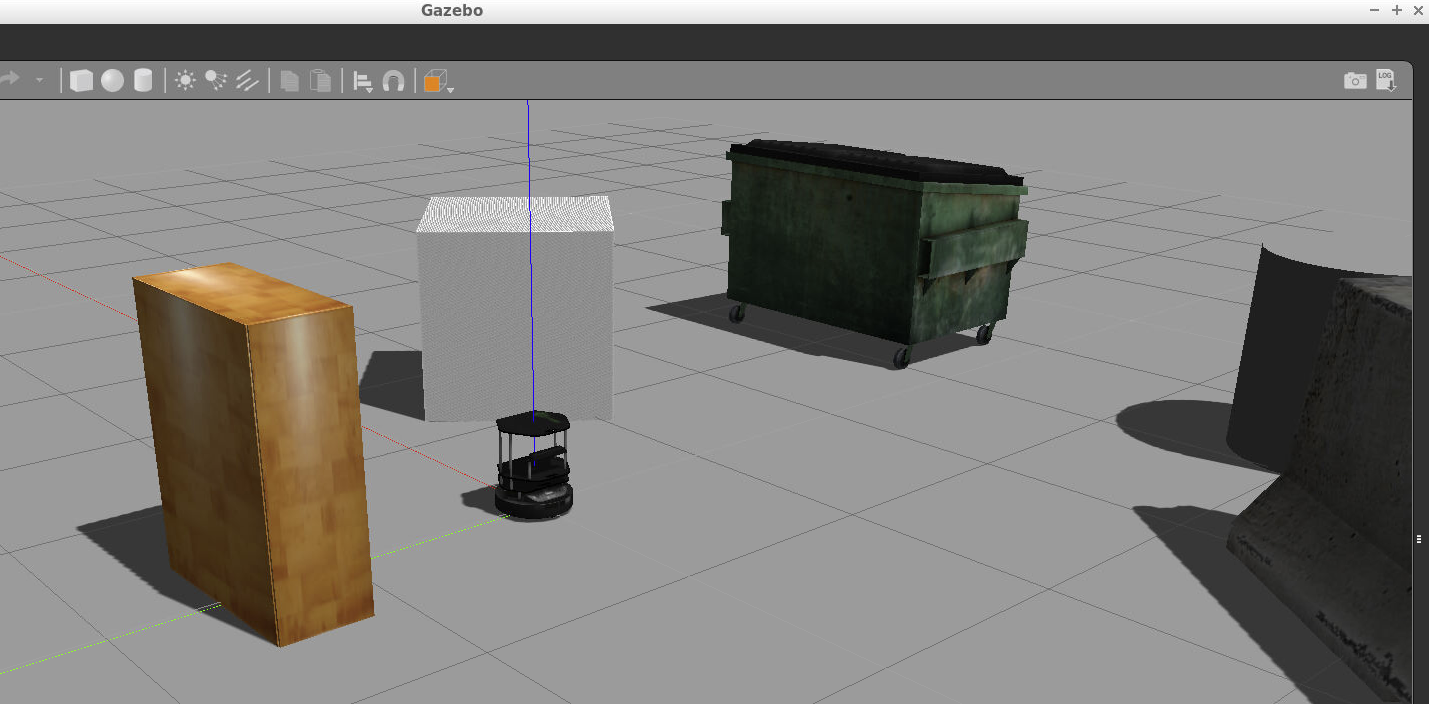

- turtlebot_gazebo launches a mobile robot in the gazebo environment.

- robot_pose_ekf estimates the position and orientation of the robot.

- odom_to_trajectory append the odometry values generated over time into a trajectory path.

- turtlebot_teleop allows to steer the robot using keyboard commands.

- rviz lets us visualize the estimated position and orientation of the robot.

This post refers to the ROS documentation and makes use of the publisher and subscriber nodes which publish and subscribe messages to topics of ROS. It explains how to change the mentioned packages to integrate these nodes into a single launch file.

With this launch file it is possible to launch the entire environment.

Mobile Robot Sensors

The following section starts off with some basics about the sensors of the mobil robot and shows that each has its disadvantages. To account for these, the sensor readings of each sensor are combined using sensor fusion with the EKF. This is done by taking the noisy measurements, compare them, filter the noise, removie the uncertainties as much as possible and provide a good estimate of the robot’s pose.

Inertial Measurement Unit

An intertial measurement unit (imu) usually consists of a gyroscope (3DOF) and a accelerometer (3DOF) and can have a magnetometer (3DOF) as well. Each of these elements of the imu has three degrees of fredom (3DOF) which results in 9DOF in total. The imu can measure the linear velocity and the position using the accelerometer by integration

\[\begin{align} v(t_1) &= v(t_0) + \int_{t_0}^{t_1} a(t) dt \\ x(t_1) &= x(t_0) + \int_{t_0}^{t_1} v(t) dt \\ \end{align}\]Taking the double integral results in a noisy position. This error is accumulated even more over time and makes it necessary to check the drift or error parameters over time.

The same is done to find the orientation, by integrating the angular velocity provided by the imu.

Rotary Encoders

This type of sensors are attached to the robots actuated wheels to measure the velocity and position of the wheels. To estimate the robots position, the integral of the velocity is calculated. However, the robot wheel might be stuck between obstacles or slip on the ground because the robot might be stuck against a wall. In this case the position won’t be calculated correctly. It is furthermore important to check an encoders resolution. An encoder with low resolution is highly sensitive to slippage.

Vision Sensor

A vision sensor can be an rgb 2D camera or even an rgbd camera that can sense the depth of its environment. The robot is then able to senese the depth towards an obstacle which can be translated to a position. The drawbacks of cameras are present in low light environments which would require other sensors, such as radar or lidar. It is also important to look at the field of view (FOV) of the vision system and take the smalles range of operation into account.

The ROS Packages

In the next sections the single packages are explained. To follow the instructions, a ROS catkin workspace needs to be initialized, which allows to hold the packages.

mkdir -p /home/workspace/catkin_ws/src

cd /home/workspace/catkin_ws/src

catkin_init_workspace

cd ..

catkin_makeThe catkin_make command is a convenience tool for working with catkin workspaces. Running it the first time in your workspace, it will create a CMakeLists.txt symlink in your src folder.

This link points to a template CMakeLists.txt ROS provides. Calling catkin_make will also build any packages located in ~/catkin_ws/src.

TurtleBot Gazebo Package

TurtleBot is not only a ROS package but also a real robot which is used extensively in ROS for localization, mapping and path planning. At the time of writing there exist three different versions of the TurtleBot:

- The TurtleBot1 with an iRobot Create Base (2010)

- TurtleBot2 with the Kobuki Base (2012)

- TurtleBot3 family (2017)

The TurtleBot platforms follow the REP 119 specification which is part of the ROS Enhancement Proposals (REP) Here, TurtleBot2 is deployed in a gazebo environment and estimate its pose. This is done by using two of its onboard sensors, which consist of rotary encoders, imu and a rgbd camera.

To obtain the list of subscriber and publisher topics we obtain the turtlebot package from ROS with the following terminal commands. This will clone the package into the catkin workspace

Clone the package

New packages from external repositories such as github are conventionally placed into the src folder of your catkin workspace.

cd /home/workspace/catkin_ws/src

git clone https://github.com/turtlebot/turtlebot_simulatorInstall the dependencies

Dependencies for a ROS package can be installed using rosdep which is the dependency tool of ROS.

cd /home/workspace/catkin_ws

source devel/setup.bash

rosdep -i install turtlebot_gazeboit clone https://github.com/turtlebot/turtlebot_simulatorOutput

#All required rosdeps installed successfullyThe source command is required to overlay this workspace on top of your environment. To understand more about this see the general catkin documentation.

Build package and source the workspace

catkin_make

source devel/setup.bashLaunch the nodes

roslaunch turtlebot_gazebo turtlebot_world.launch

List the topics

rostopic listThe topics that will be listed are

/camera/depth/camera_info

/camera/depth/image_raw

/camera/depth/points

/camera/parameter_descriptions

/camera/parameter_updates

/camera/rgb/camera_info

/camera/rgb/image_raw

/camera/rgb/image_raw/compressed

/camera/rgb/image_raw/compressed/parameter_descriptions

/camera/rgb/image_raw/compressed/parameter_updates

/camera/rgb/image_raw/compressedDepth

/camera/rgb/image_raw/compressedDepth/parameter_descriptions

/camera/rgb/image_raw/compressedDepth/parameter_updates

/camera/rgb/image_raw/theora

/camera/rgb/image_raw/theora/parameter_descriptions

/camera/rgb/image_raw/theora/parameter_updates

/clock

/gazebo/link_states

/gazebo/model_states

/gazebo/parameter_descriptions

/gazebo/parameter_updates

/gazebo/set_link_state

/gazebo/set_model_state

/joint_states

/mobile_base/commands/motor_power

/mobile_base/commands/reset_odometry

/mobile_base/commands/velocity

/mobile_base/events/bumper

/mobile_base/events/cliff

/mobile_base/sensors/imu_data

/odom

/rosout

/rosout_agg

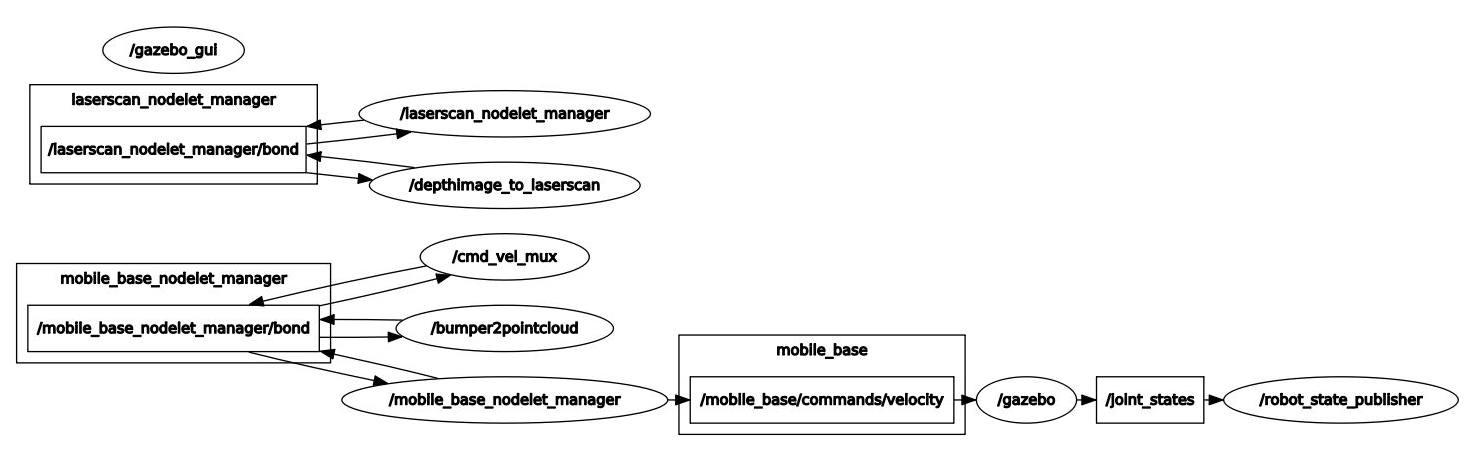

/tfIt is also possible to use rqt graph to visualize the topics of the package.

rosrun rqt_graph rqt_graph

The turtlebot_gazebo node has the following subscribers and publishers that are important for us:

| Subscribers | /cmd_vel_mux/input/teleop | ||

| Publishers | /odom | /camera/depth/image_raw | /mobile_base/sensors/imu_data |

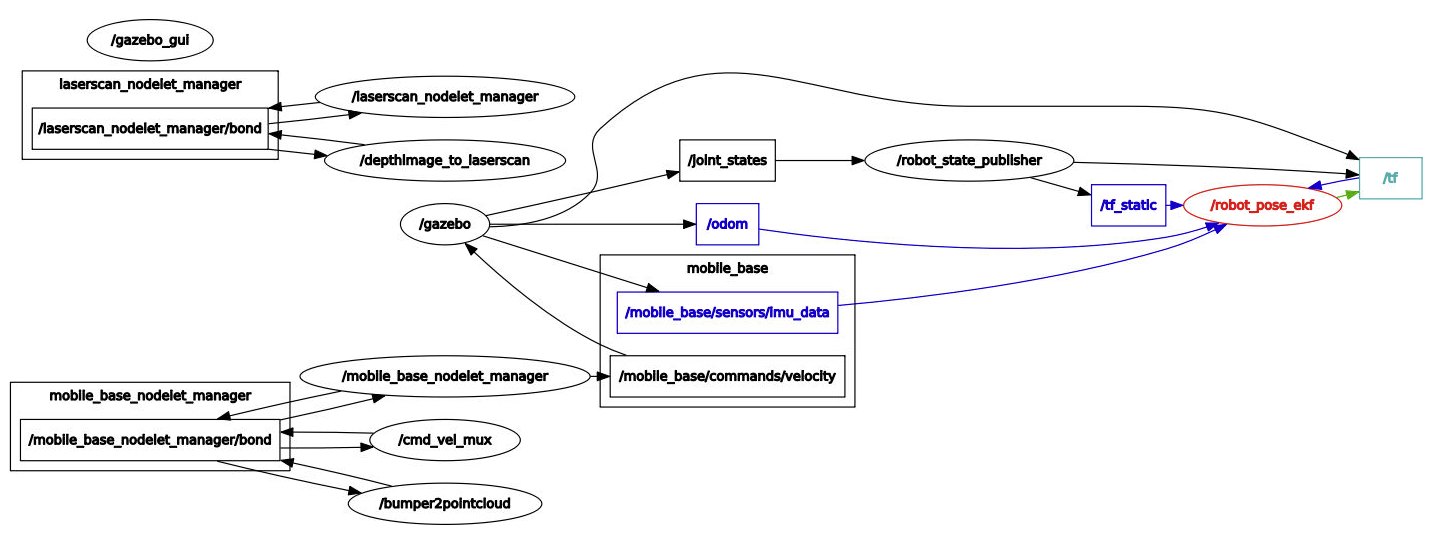

Robot Pose EKF Package

In this section we implement the ekf Kalman filter package to localize the robot’s pose. The documentation of the robot_pose_ekf package shows that the node subscribes to the rotary encoder data through the /odom topic. It is also subscribing to the imu data through the /imu_data topic. Lastly the node is subscribing to three dimensional odometry data through the /vo topic. These sensor data input gets fused by the ekf which results in a filtered and more accurate output pose than one sensor could provide. This combined output is published by ekf node which acts as a publisher. The topic is called /robot_pose_ekf/odom_combined, which is an estimated 3D pose of the robot.

To install the package its git reposityor is cloned into the src directory of the catkin workspace.

cd /home/workspace/catkin_ws/src/

git clone https://github.com/udacity/robot_pose_ekfTo interface the robot_pose_ekf package with the turtlebot_gazebo package and estimate the robots pose it is necessary to rename the following topics to match them. The turtlebot_gazebo package provides a rgbd topic which cannot direclty be used by the robot_pose_ekf package. Also the 3D odometry /vo topic of the ekf package cannot be provided by the turtlebot_gazebo package. Therefore we use and match only the remaining two topics:

| turtlebot_gazebo | robot_pose_ekf |

|---|---|

| /odom | /odom |

| /mobile_base/sensors/imu_data | /imu_data |

These modifications can be achieved by editing the ekf launch file robot_pose_ekf.launch in the src folder of the ekf package in order to turn off the 3D odometry.

<launch>

<node pkg="robot_pose_ekf" type="robot_pose_ekf" name="robot_pose_ekf">

<param name="output_frame" value="odom_combined"/>

<param name="base_footprint_frame" value="base_footprint"/>

<param name="freq" value="30.0"/>

<param name="sensor_timeout" value="1.0"/>

<param name="odom_used" value="true"/>

<param name="imu_used" value="true"/>

<param name="vo_used" value="false"/>

<remap from="imu_data" to="/mobile_base/sensors/imu_data" />

</node>

</launch>Build the package

cd /home/workspace/catkin_ws

catkin_make

source devel/setup.bashLaunch the Node

roslaunch robot_pose_ekf robot_pose_ekf.launchVisualize the topics:

To confirm that the topics of the robot_pose_ekf package and the turtlebot_gazebo package are communicating with each other we check the rqt_graph.

rosrun rqt_graph rqt_graph

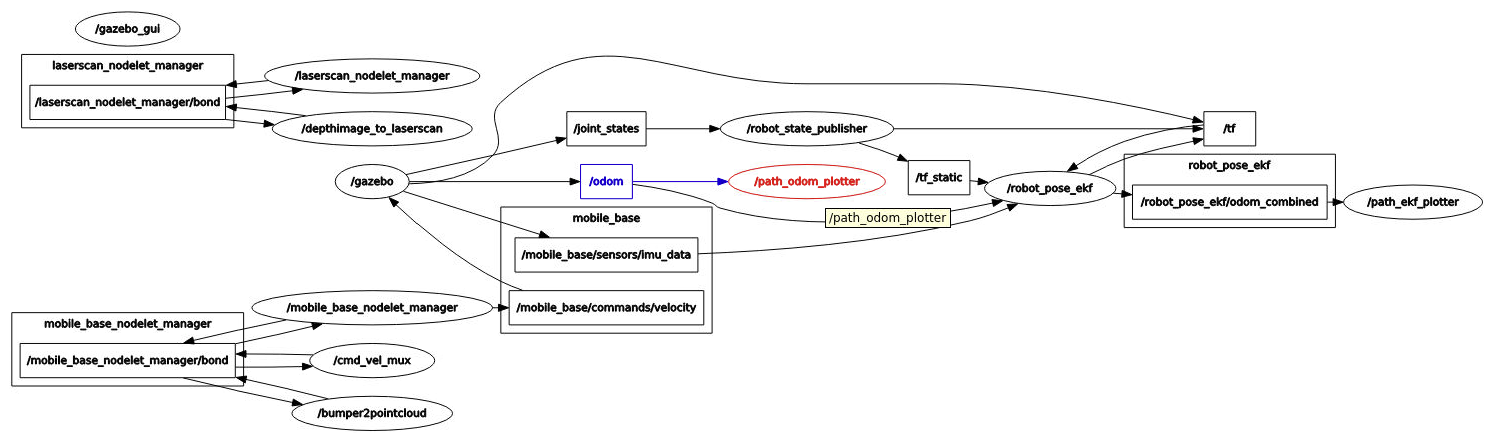

Odometry to Trajectory Package

Currently the turtlebot is publishing the unfiltered pose through the /odom topic and the ekf is publishing the filtered pose via the /robot_pose_ekf/odom_combined topic as the following commands show.

root@pc:/home/workspace/catkin_ws# rostopic info /odom

Type: nav_msgs/Odometry

Publishers:

* /gazebo (http://pc:36747/)

Subscribers:

* /robot_pose_ekf (http://pc:44537/)

root@pc:/home/workspace/catkin_ws# rostopic info /robot_pose_ekf/odom_combined

Type: geometry_msgs/PoseWithCovarianceStamped

Publishers:

* /robot_pose_ekf (http://d7ea9f1e67d3:44537/)

Subscribers: NoneTo compare filtered and unfiltered trajectories it is required to append the timestamped poses, which are generated over time, to a trajectory using another node.

To do this, two trajectories are created using the odometry_to_trajectory ROS package. It subscribes to odometry values and publishes trajectories.

The package contains two nodes, where the first node subscribes to the unfilterd robot poses and appends the last 1000 poses to a trajectory that is then published.

The second node subscribes to the filtered robot pose from the ekf package and publishes also a trajectory using the last 1000 poses.

Install the package

cd /home/workspace/catkin_ws/src

git clone https://github.com/udacity/odom_to_trajectoryBuild the package

cd /home/workspace/catkin_ws

catkin_make

source devel/setup.bashLaunch the Node

roslaunch robot_pose_ekf robot_pose_ekf.launchVisualize the topics:

To confirm that the topics of the robot_pose_ekf package and the turtlebot_gazebo package are communicating with each other we check the rqt_graph.

rosrun rqt_graph rqt_graph

When we show only the active nodes and topics, we see now that the /robot_pose_ekf/odom_combined topic is publishing to the subscribing /path_plotter_ekf node.

TurtleBot Teleop Package

Currently the robot is standing still which results in trajectories with zero values and the robot is only performing sensor updates without motion updates. To get more interesting trajectory data from the previously mentioned node, the robot needs to move and collect further sensory information. To move the robot we use the turtlebot_teleop package, which lets you control a robot using the keyboard or a joystick by publishing driving commands.

The properties of this package does not subscribe to a topic. It only publishes control commands to the /cmd_vel_mux/input/teleop topic.

This topic is the same to which the turtlebot_gazebo package is subscribing.

To let the turtlebot_gazebo package subscribe to the turtlebot_teleop package via the /cmd_vel_mux/input/teleop topic, the new node needs to be launched.

This makes it possible to drive the robot around in the gazebo environment and create trajectories which can be compared.

Install the package

cd /home/workspace/catkin_ws/src

git clone https://github.com/turtlebot/turtlebotInstall the dependencies

cd /home/workspace/catkin_ws

source devel/setup.bash

rosdep -i install turtlebot_teleopBuild the package

cd /home/workspace/catkin_ws

catkin_make

source devel/setup.bashLaunch the Node

roslaunch turtlebot_teleop keyboard_teleop.launchNow it is possible to move the robot around using the keyboard commands. However, make sure that the terminal running the teleop node is active and receiving the keyboard commands.

Control Your Turtlebot!

---------------------------

Moving around:

u i o

j k l

m , .

q/z : increase/decrease max speeds by 10%

w/x : increase/decrease only linear speed by 10%

e/c : increase/decrease only angular speed by 10%

space key, k : force stop

anything else : stop smoothlyRviz Package

Rviz is used to visualize the robot data and helpful for debugging. An introduction can be found in the user guide. To display the data of the nodes that are running we run rviz.

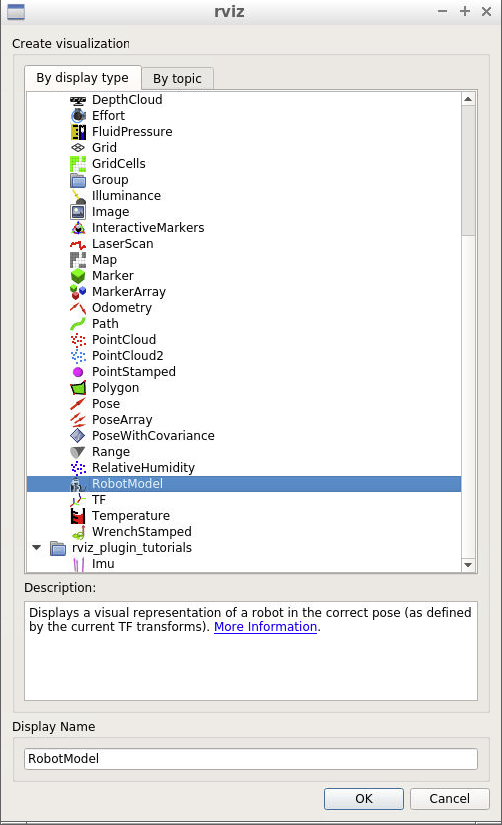

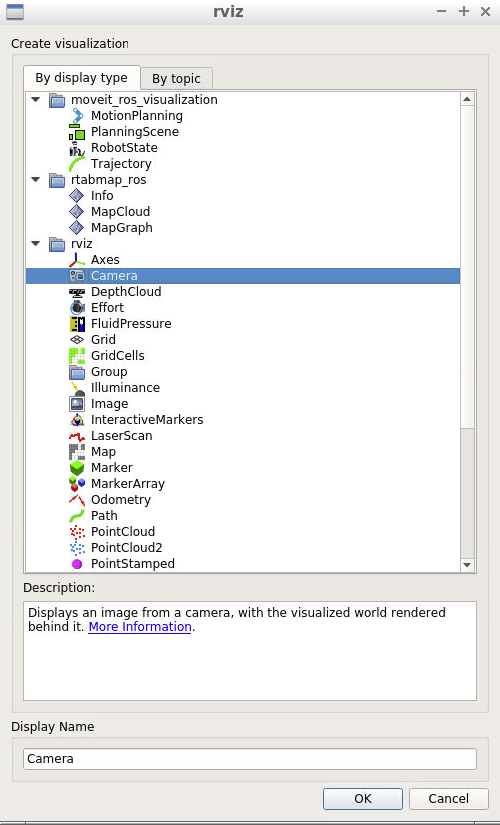

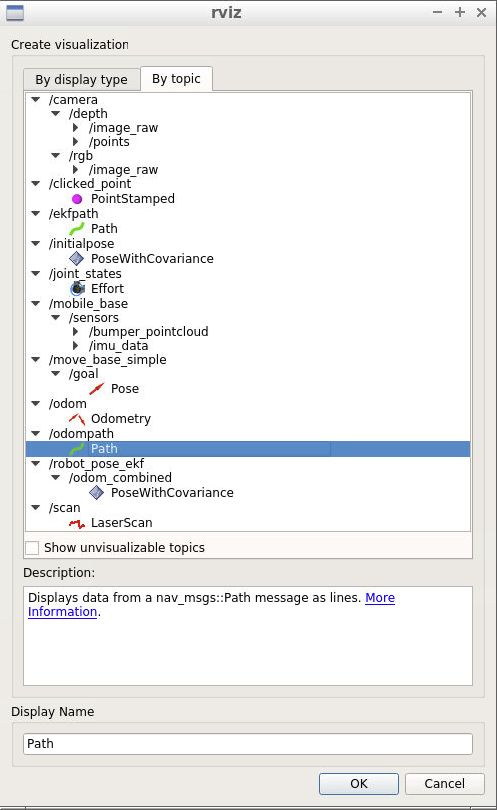

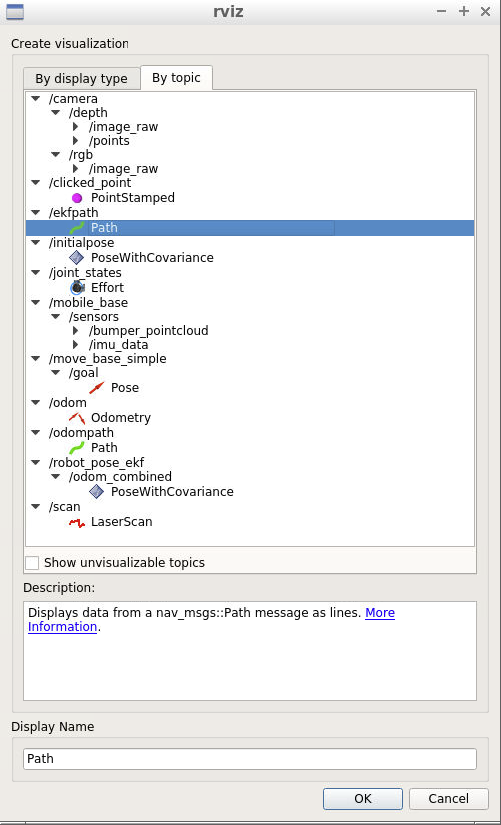

rosrun rviz rvizAfter rviz runs, its configuration needs to be configured.

- Change the Fixed Frame to

base_footprint - Change the Reference Frame to

odom - Add a robot model

- Add a camera and select

/camera/rgb/image_rawtopic - Add a

/ekfpathtopic and change the display name to EKFPath - Add a

/odomtopic and change the display name to OdomPath and its color to red(255,0,0)

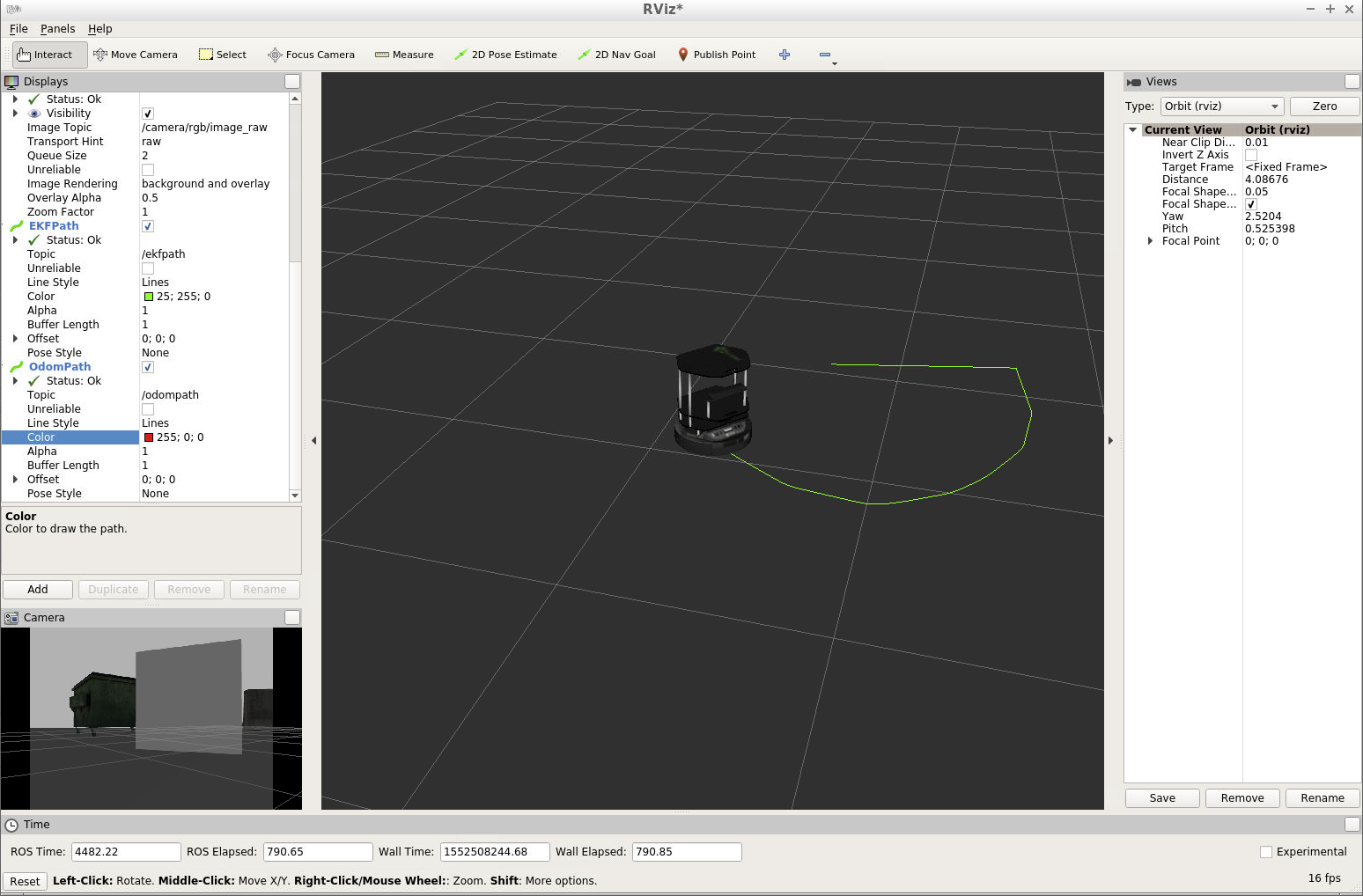

Completing these steps will result in the following rviz configuration when the robot is controlled by the turtlebot_teleop node.

Save this rviz configuration to avoid repeating the steps above.

You can save it for example in the src folder of your catkin workspace as TurtleBotEKF.rviz.

To test if the saved configuration works, kill the rviz terminal and relaunch rviz with the following parameter.

rosrun rviz rviz -d /home/workspace/catkin_ws/src/TurtleBotEKF.rvizIt is also possible to use a launch file in order to run this rviz configuration using roslaunch.

<launch>

<!--RVIZ-->

<node pkg="rviz" type="rviz" name="rviz" args="-d /home/workspace/catkin_ws/src/TurtleBotEKF.rviz"/>

</launch>Main Launch

The created launch file can be integrated together with the launch files of the other nodes in a main launch file main.launch.

This simplifies the process of launching all the created nodes in the mentioned packages. Instead of launch them individually in seperate terminals it is sufficient to lauch the main launch file.

To achieve this, we create a main package that contains the main.launch.

A new ROS package can be created with the catkin_create_pkg command.

Create a main package

/home/workspace/catkin_ws/src

catkin_create_pkg mainBuild the package

/home/workspace/catkin_ws

catkin_make Create and edit the main.launch file

cd /home/workspace/catkin_ws/src/main

mkdir launch

cd launch

vim main.launchUse the content for the main.lauch file from Udacity’s GitHub repository or copy it below.

<launch>

<!--Robot Pose EKF Package -->

<!-- The path_ekf_plotter node -->

<node name="path_ekf_plotter" type="path_ekf_plotter.py" pkg="odom_to_trajectory">

</node>

<!-- The path_odom_plotter node -->

<node name="path_odom_plotter" type="path_odom_plotter.py" pkg="odom_to_trajectory">

<!--RobotPose EKF package-->

</node>

<node pkg="robot_pose_ekf" type="robot_pose_ekf" name="robot_pose_ekf">

<param name="output_frame" value="odom_combined"/>

<param name="base_footprint_frame" value="base_footprint"/>

<param name="freq" value="30.0"/>

<param name="sensor_timeout" value="1.0"/>

<param name="odom_used" value="true"/>

<param name="imu_used" value="true"/>

<param name="vo_used" value="false"/>

<remap from="imu_data" to="/mobile_base/sensors/imu_data" />

</node>

<!-- TurleBot Gazzebo-->

<arg name="world_file" default="$(env TURTLEBOT_GAZEBO_WORLD_FILE)"/>

<arg name="base" value="$(optenv TURTLEBOT_BASE kobuki)"/> <!-- create, roomba -->

<arg name="battery" value="$(optenv TURTLEBOT_BATTERY /proc/acpi/battery/BAT0)"/> <!-- /proc/acpi/battery/BAT0 -->

<arg name="gui" default="true"/>

<arg name="stacks" value="$(optenv TURTLEBOT_STACKS hexagons)"/> <!-- circles, hexagons -->

<arg name="3d_sensor" value="$(optenv TURTLEBOT_3D_SENSOR kinect)"/> <!-- kinect, asus_xtion_pro -->

<include file="$(find gazebo_ros)/launch/empty_world.launch">

<arg name="use_sim_time" value="true"/>

<arg name="debug" value="false"/>

<arg name="gui" value="$(arg gui)" />

<arg name="world_name" value="$(arg world_file)"/>

</include>

<include file="$(find turtlebot_gazebo)/launch/includes/$(arg base).launch.xml">

<arg name="base" value="$(arg base)"/>

<arg name="stacks" value="$(arg stacks)"/>

<arg name="3d_sensor" value="$(arg 3d_sensor)"/>

</include>

<node pkg="robot_state_publisher" type="robot_state_publisher" name="robot_state_publisher">

<param name="publish_frequency" type="double" value="30.0" />

</node>

<!-- Fake laser -->

<node pkg="nodelet" type="nodelet" name="laserscan_nodelet_manager" args="manager"/>

<node pkg="nodelet" type="nodelet" name="depthimage_to_laserscan"

args="load depthimage_to_laserscan/DepthImageToLaserScanNodelet laserscan_nodelet_manager">

<param name="scan_height" value="10"/>

<param name="output_frame_id" value="/camera_depth_frame"/>

<param name="range_min" value="0.45"/>

<remap from="image" to="/camera/depth/image_raw"/>

<remap from="scan" to="/scan"/>

</node>

<!--RVIZ-->

<node pkg="rviz" type="rviz" name="rviz" args="-d /home/workspace/catkin_ws/src/EKFLab.rviz"/>

<!--Use this insted if you are cloning the whole repo in your src-->

<!--<node pkg="rviz" type="rviz" name="rviz" args="-d /home/workspace/catkin_ws/src/RoboND-EKFLab/EKFLab.rviz"/>-->

<!--Turtlebot Teleop-->

<node pkg="turtlebot_teleop" type="turtlebot_teleop_key" name="turtlebot_teleop_keyboard" output="screen">

<param name="scale_linear" value="0.5" type="double"/>

<param name="scale_angular" value="1.5" type="double"/>

<remap from="turtlebot_teleop_keyboard/cmd_vel" to="cmd_vel_mux/input/teleop"/>

</node>

</launch>Launch the main.launch file

cd /home/workspace/catkin_ws/

source devel/setup.bash

roslaunch main main.launchRqt Multiplot

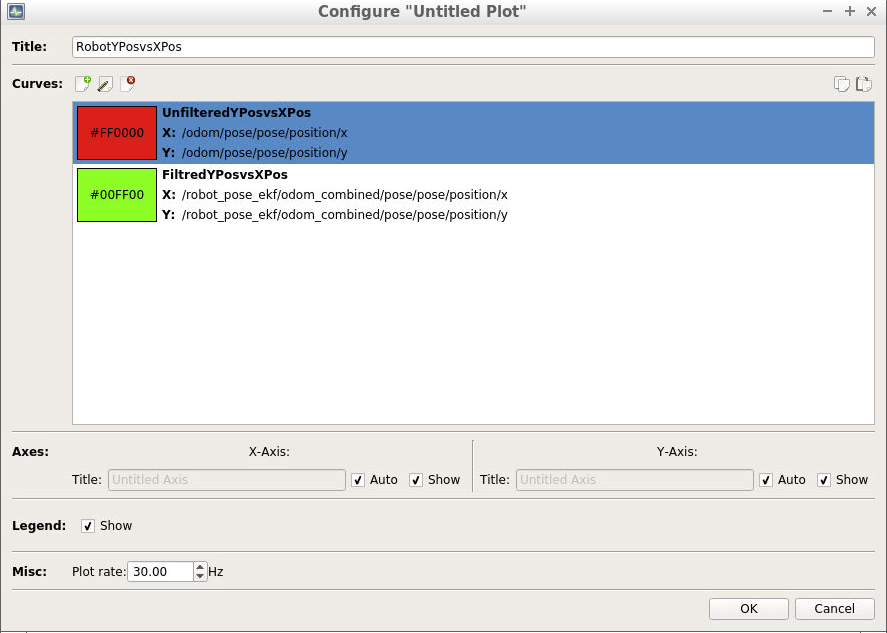

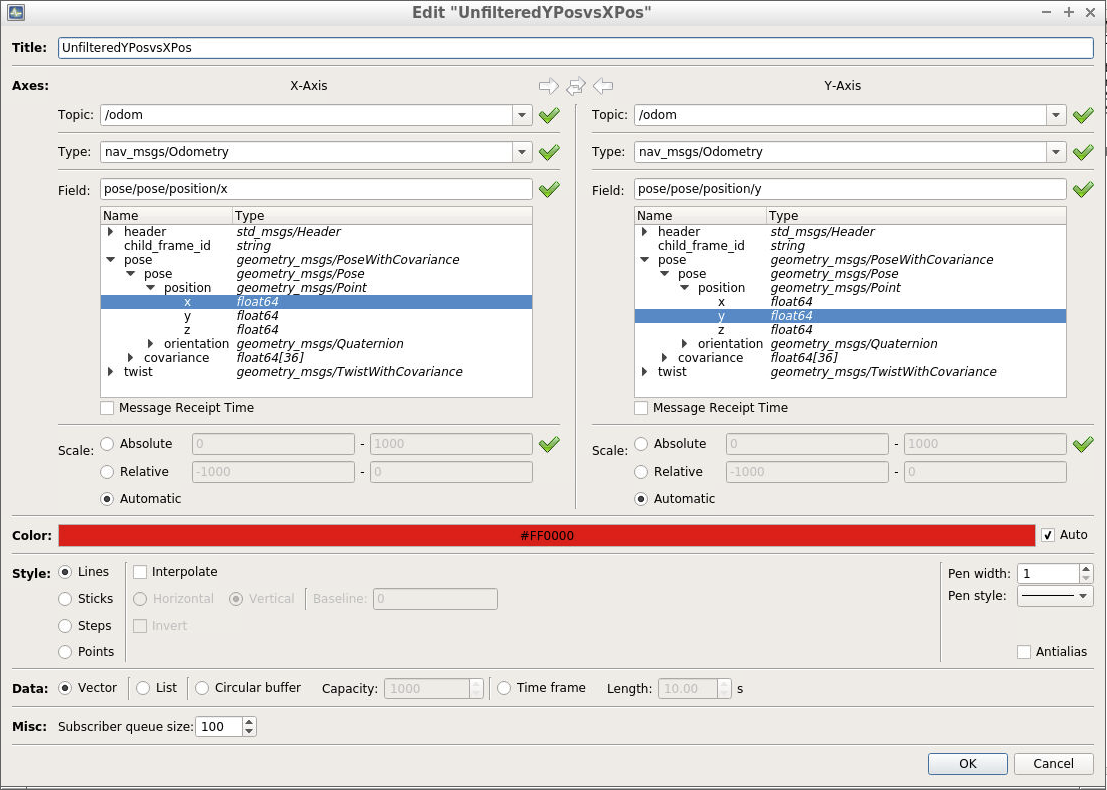

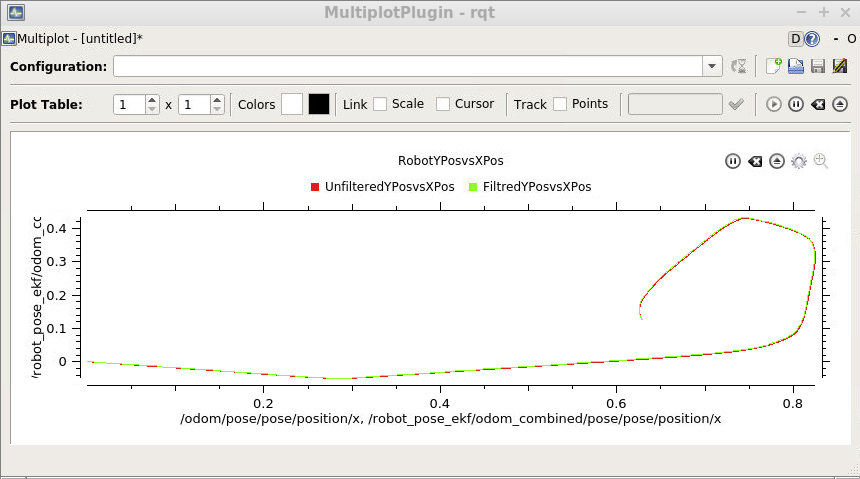

So far it is possible to move the robot using the turtlebot_teleop node, publish the unfiltered odometry and filtered trajectories using the ekf package.

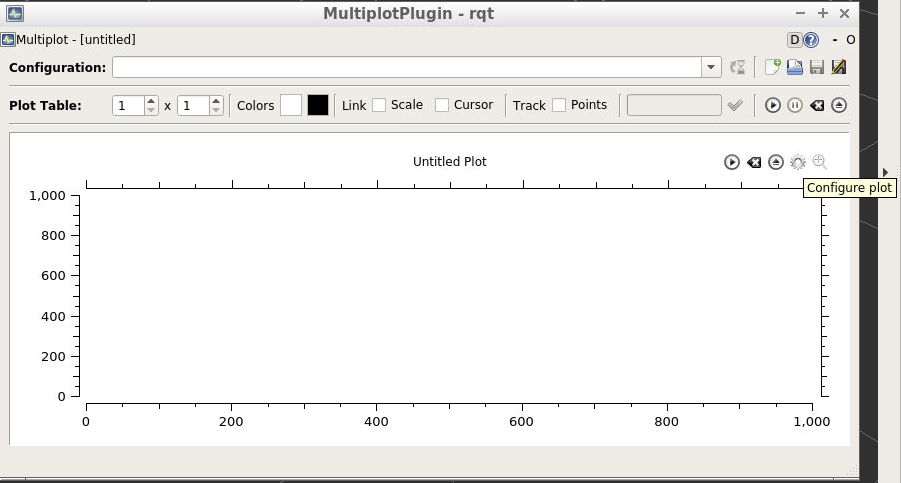

To visualize how close the estimated pose of the ekf is to the unfiltred odometry trajectory we use rqt multiplot from ROS. This is an additional package that needs to be installed.

Note, that ROS comes with plotting package rqt_plot which can graph values that are echoed by different topics. However, these signals will be only plotted over time.

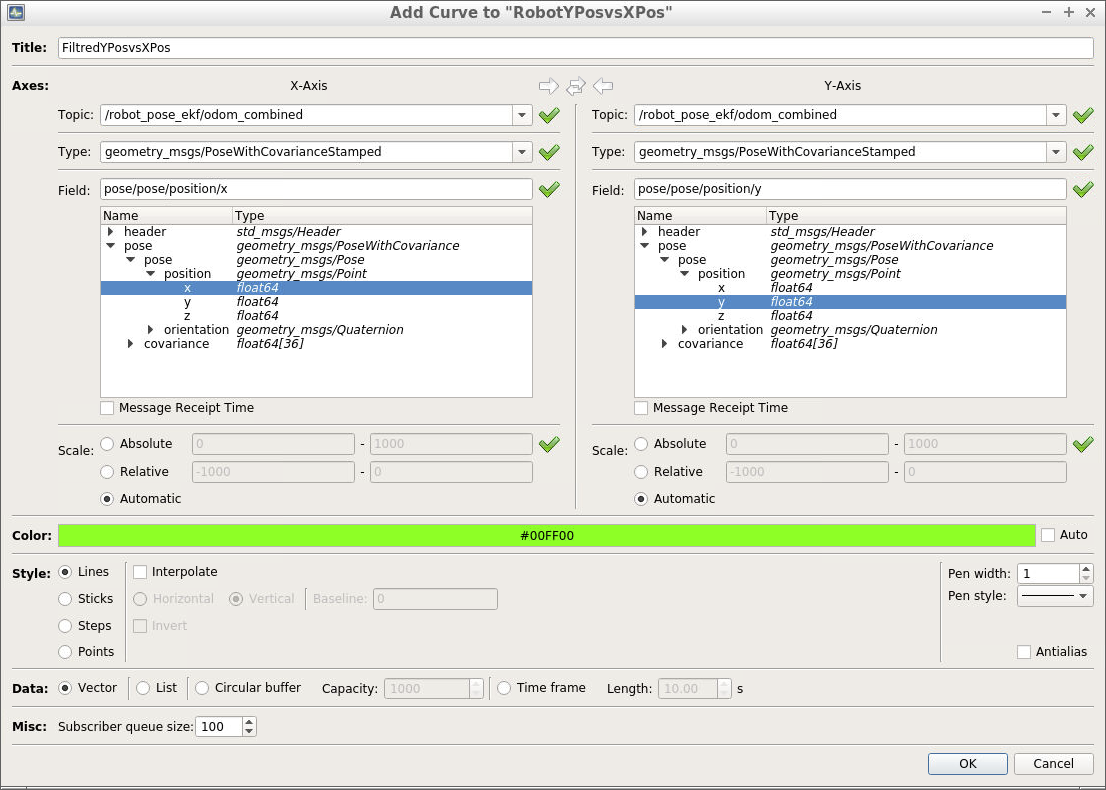

To get more plotting capability, such as plotting the y position over the x position, rqt_multiplot is required.

apt-get install ros-kinetic-rqt -y

apt-get install ros-kinetic-rqt-multiplot -y

apt-get install libqwt-dev -y

rm -rf ~/.config/ros.org/rqt_gui.iniThen run the rqt_multiplot package node.

rosrun rqt_multiplot rqt_multiplotFollow the steps below to add two plots. One will show the unfiltered y position over the x position. The other plot will show the filtered y(x) position from the ekf package.

By default the graph plotting is paused. Simply press play in rqt_multiplot and move the robot around. Now it is possible to visualize how close the odometry trajectory is to the filtered trajectory produced by the ekf.

Another comparison that is left open is to compare the filtered and unfiltered data with percise reference sensor, such as differential gps.

Summary

This concludes the Udacity Kalman Filter Lab. We’ve estimated the robot’s pose with an extended Kalman filter ROS package.

That was done by remapping some publisher and subscriber topics and establishing a communication between nodes of different packages.

To simplify the launch, all of the launch files were grouped into a single main.launch file, that can be launched from the terminal.

Access the EKF Lab in the Udacity GitHub repository.

Links

Reference

This post is a summary of the EKFLab from the Robotics Nanodegree of Udacity found here

Comments